‘I Have the Right To Talk About Their Decision’: Colbert Doubles Down on Claim CBS Blocked Him From Interviewing Democrat

By BRADLEY CORTRIGHT

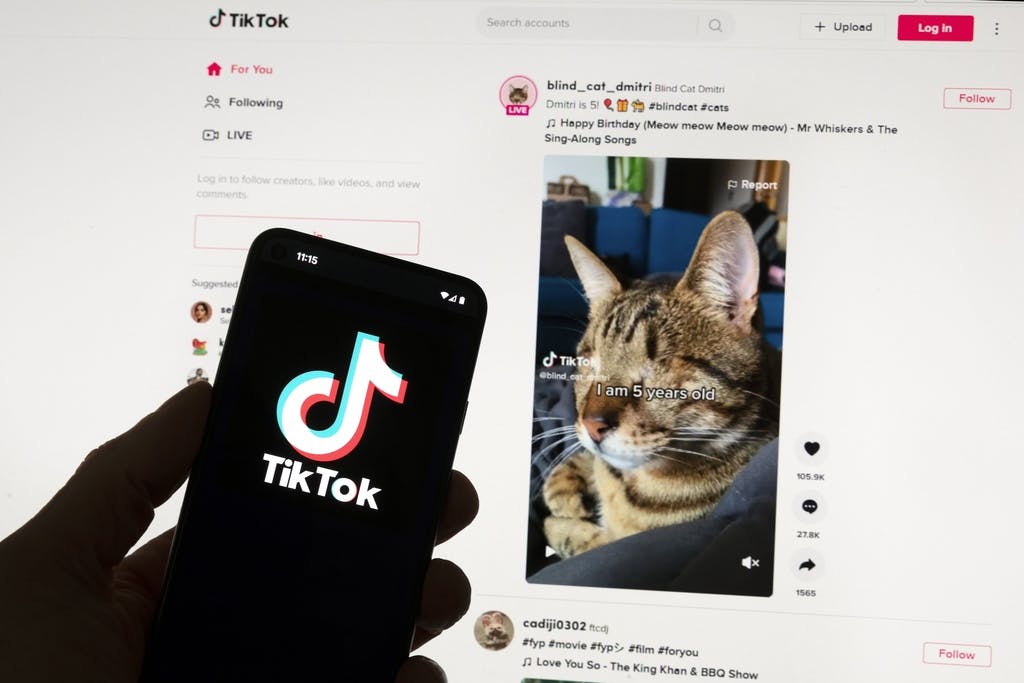

The landmark guidelines of the Digital Services Act provoke concerns over content moderation that could affect an area far wider than Europe.



