Can Trump ‘Nationalize’ Elections?

By THE NEW YORK SUN

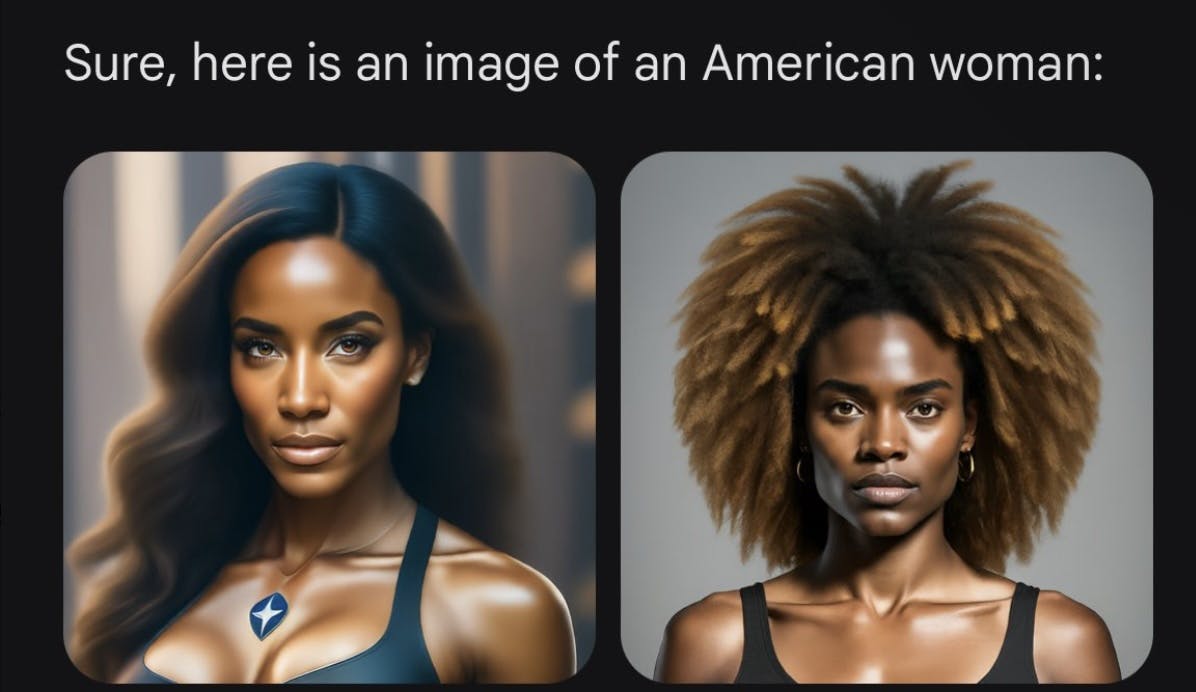
